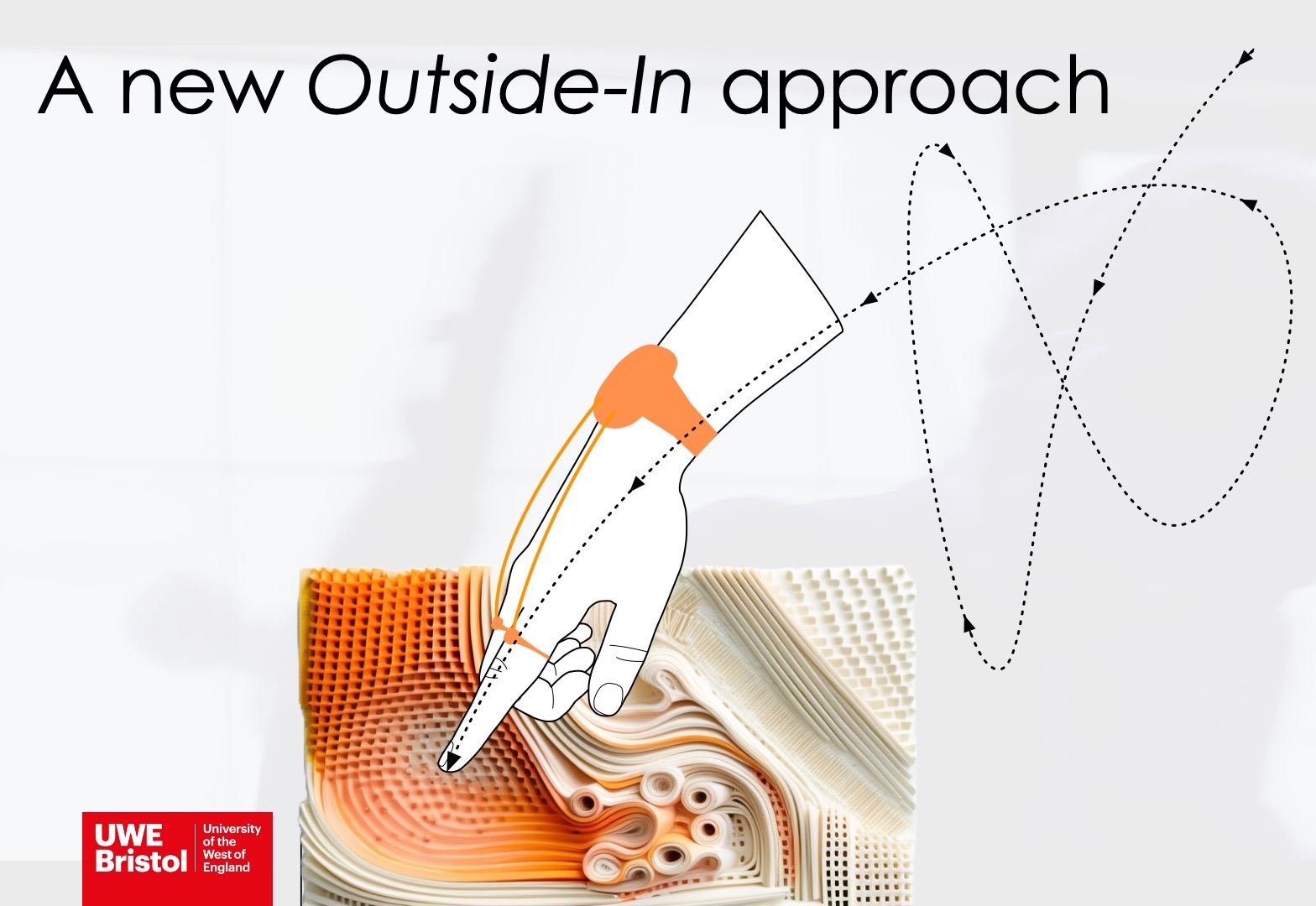

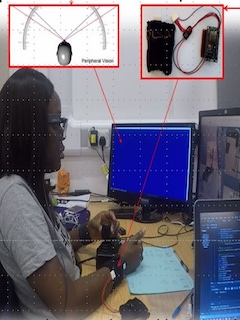

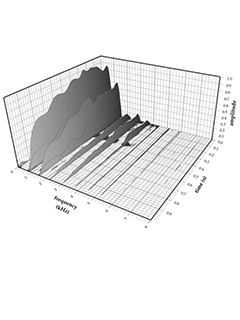

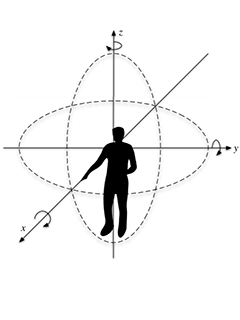

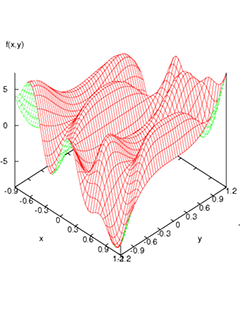

Hi, I'm Tom (he/him), and I am a Professor of Audio and Music Interaction in the Department of Computing and Creative Technologies at UWE, Bristol. I am also a UKRI Future Leaders Fellow working on the project “Sensing Music Interactions from the Outside-In”. For more information, see the project website: www.micalab.org.

I lead the Creative Technologies Laboratory and co-lead the Bridge, a £3M creative technology facility at UWE funded by the AHRC and WECA. I am also an associate member of the Computer Science Research Centre and the Bristol Robotics Laboratory, and a resident at the Watershed's amazing Pervasive Media Studio.

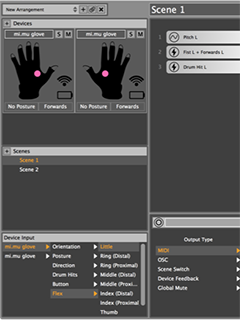

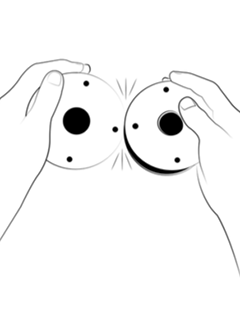

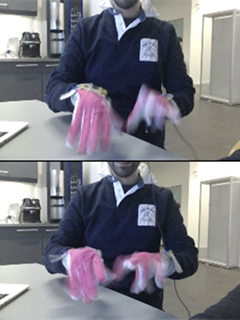

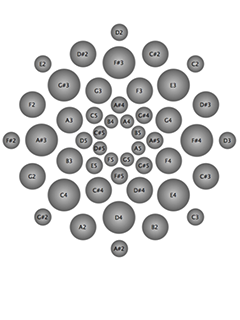

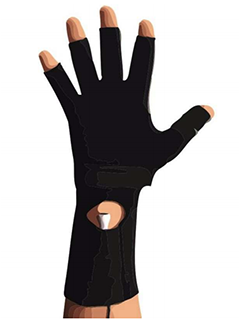

I am a co-founder of mi.mu gloves, a technology company that enables musicians to compose and perform through movement and gesture. The company grew out of a research collaboration between the inimitable Imogen Heap and me, which began back in 2011.

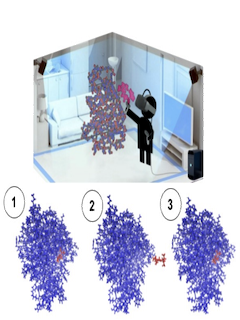

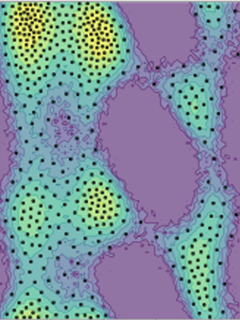

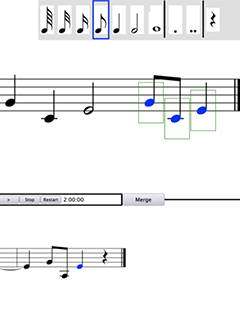

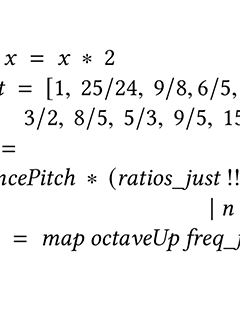

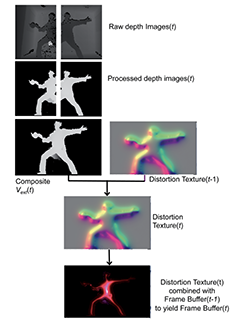

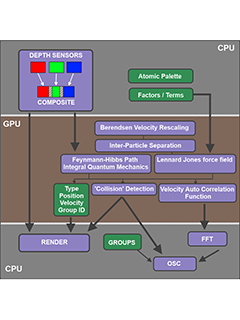

I am also an experienced software developer and C++ programmer, and I am very familiar with the JUCE library. Over the years I have contributed to a range of interactive media and audio projects for Tracktion, Spitfire Audio, May Productions, Interactive Scientific, x-io Technologies, mi.mu gloves, and Phona, to name a few. I have also worked on a range of interdisciplinary research projects combining art and science, including Soma, danceroom Spectroscopy and Transmission.